A Tech Worker's Perspective on Responsible AI

Script for the talk at the RAIL Fellowship Symposium hosted by Digital Futures Lab.

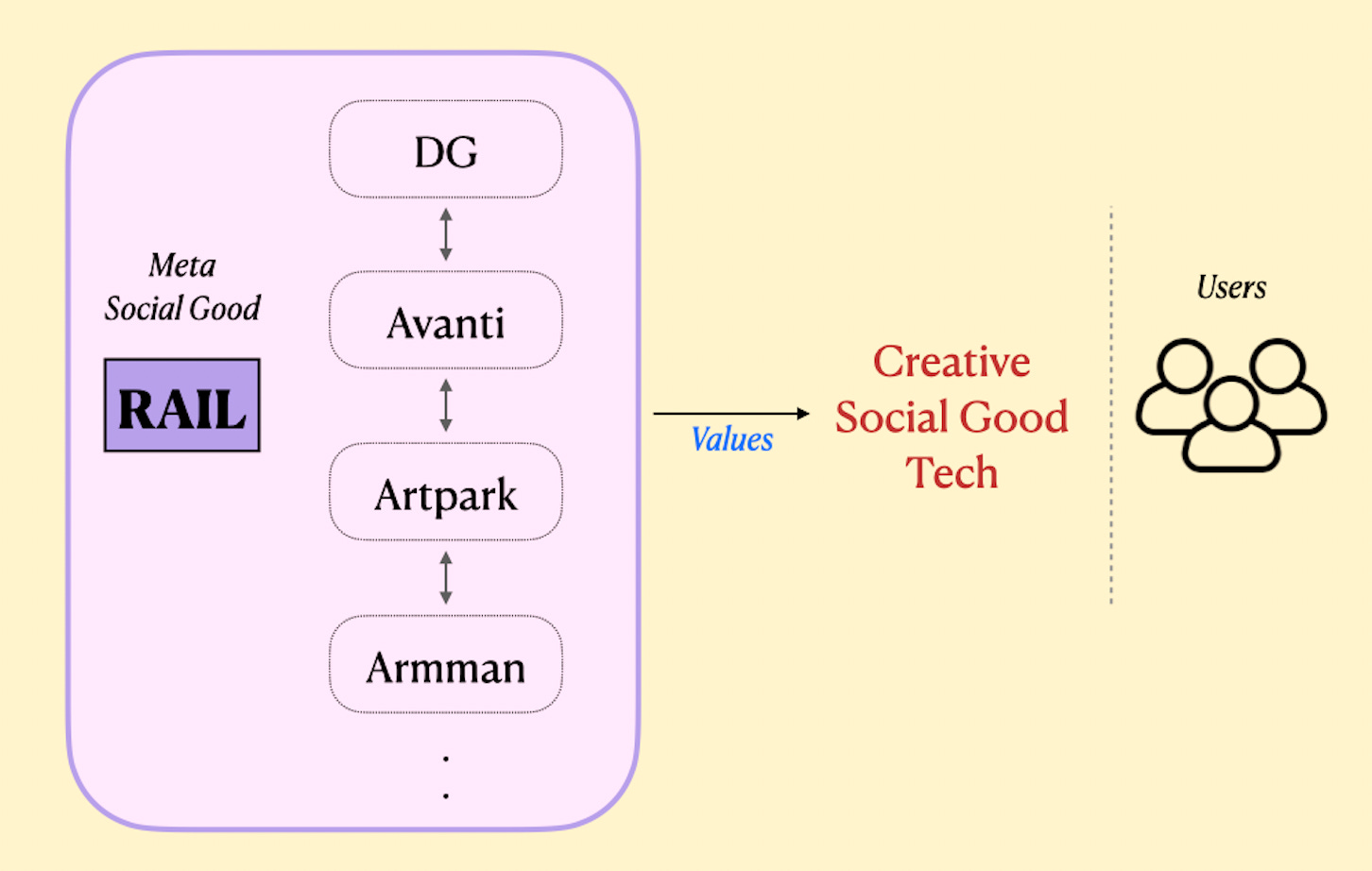

Context: Digital Futures Lab, a non-profit organization based in Goa, focuses on exploring the intersection of technology and society. Avanti was one of fourteen organizations chosen to participate in their inaugural RAIL (Responsible AI Lab) Fellowship. The fellowship's aim is to promote the adoption of RAI principles in the design and deployment of AI systems in India. This talk was presented at the RAIL Symposium, a public event held in Bengaluru, marking the culmination of the fellowship cohort’s learnings and insights. Slides are available here.

I’ll be approaching this talk from a tech worker's perspective, and I hope it complements the diverse insights shared by other speakers today.

Let me give you a quick overview of what Avanti does. We run a combination of free offline and digital interventions to improve educational outcomes in India. In particular, we focus on underserved grade 11 and grade 12 students in government schools, helping them excel in competitive exams like JEE and NEET through live classes, assessments, and study material. The idea is that by entering top colleges and landing well-paying jobs, these students can lift their families out of poverty.

One of our key digital tools is the Quiz Engine, which is an open-source platform for assessments and homework. It’s designed to mimic the National Testing Agency's (NTA) online exam environment, giving students a realistic training ground for their final exams. The tool is used daily by thousands of students and teachers in public schools across India.

Here’s how it works: Our curriculum team prepares the questions, and the quiz links are shared with students through WhatsApp by teachers and the operations team. My role as part of the tech team is to ensure the engine runs smoothly, especially during large-scale tests. I also assist in analyzing student performance data and pushing for feature improvements.

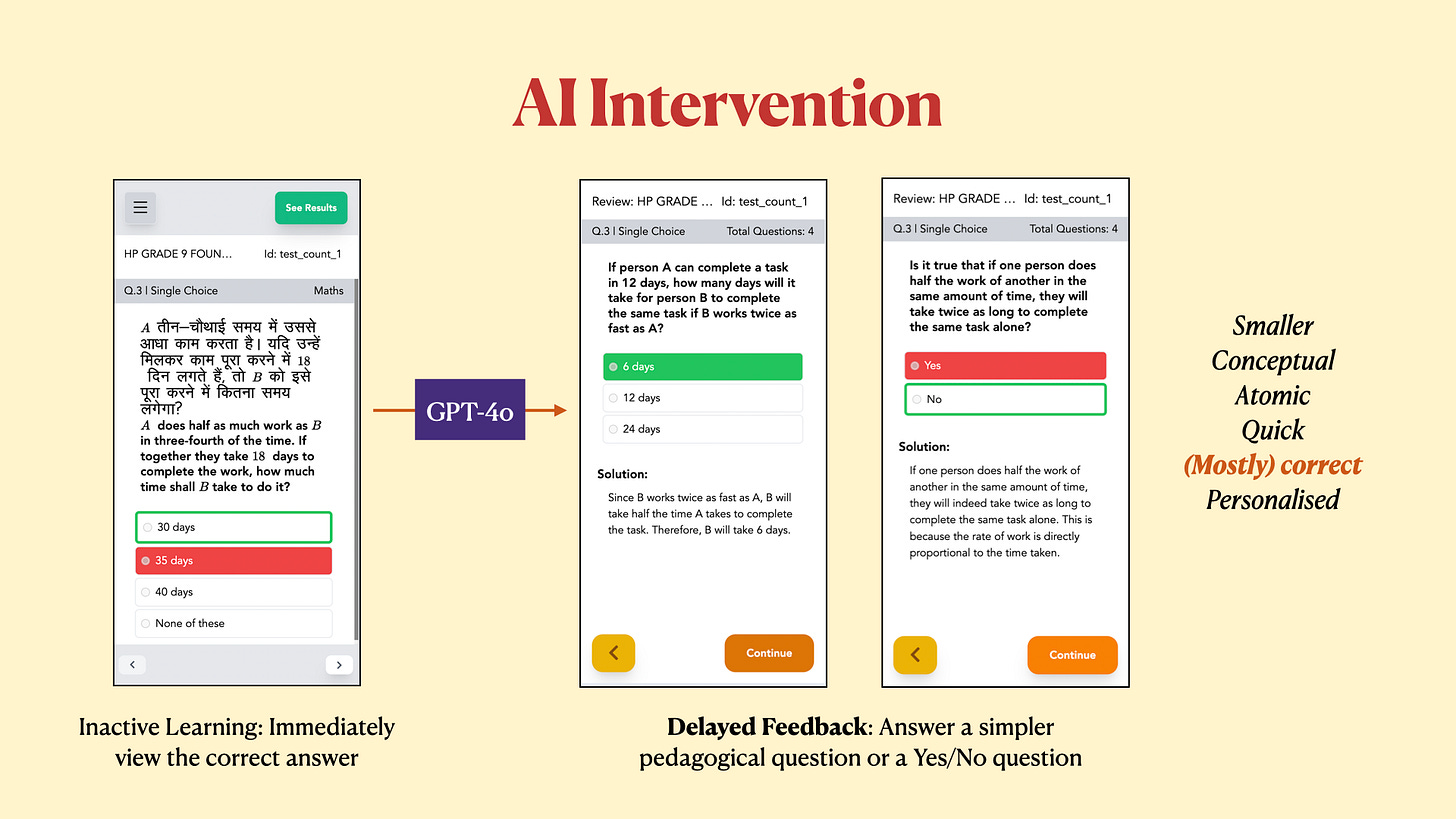

We noticed a recurring issue in our workflow: students rarely revisit the questions they got wrong, perhaps because the process is dull and passive. This is sad because fixing mistakes and reinforcing those concepts could significantly improve their learning and final exam performance.

One solution is to offer interesting revision questions alongside their report cards after each assessment. But there’s a challenge: the curriculum team is already stretched thin creating the main questions. Adding personalized revision questions to their workload would be too much.

This is where we saw the potential for AI. The base content comes from questions the curriculum team has already designed. Using GPT, we generated smaller, simpler revision questions, such as fill-in-the-blanks or yes/no formats. These are quick, fun, and tailored to help students engage actively and retain what they have learned. For instance, the main math question here contains three to four tricky equations, whereas the revision question requires framing a single core equation.
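A minimal sketch of how this generation step could be wired up, assuming each main question is available with its text and solution. Every name here (`MainQuestion`, `build_revision_prompt`, `generate_revision_questions`) and the prompt wording are hypothetical illustrations, not Avanti's actual code. The `generate` argument stands in for whatever call sends a prompt to the language model, so the sketch makes no assumptions about a specific API.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterable

# Formats mentioned in the talk; the exact labels are an assumption.
ALLOWED_FORMATS = ("fill-in-the-blank", "yes/no")

# Hypothetical prompt wording, for illustration only.
PROMPT_TEMPLATE = (
    "You are helping a grade 11-12 student revise.\n"
    "Main question: {text}\n"
    "Worked solution: {solution}\n"
    "Write ONE short {fmt} revision question that tests the single "
    "core idea needed to solve the main question."
)


@dataclass
class MainQuestion:
    """A question authored by the curriculum team (hypothetical schema)."""
    question_id: str
    text: str
    solution: str


def build_revision_prompt(question: MainQuestion, fmt: str = "fill-in-the-blank") -> str:
    """Turn an existing main question into a prompt for a simpler revision question."""
    if fmt not in ALLOWED_FORMATS:
        raise ValueError(f"unsupported format: {fmt!r}")
    return PROMPT_TEMPLATE.format(text=question.text, solution=question.solution, fmt=fmt)


def generate_revision_questions(
    wrong_questions: Iterable[MainQuestion],
    generate: Callable[[str], str],
    fmt: str = "fill-in-the-blank",
) -> Dict[str, str]:
    """Map each question a student got wrong to a generated revision question.

    `generate` is a placeholder for the model call: it takes a prompt
    string and returns the model's text response.
    """
    return {
        q.question_id: generate(build_revision_prompt(q, fmt))
        for q in wrong_questions
    }
```

Because the result is keyed by question ID, the revision questions could be generated ahead of time and simply looked up when a report card is shown, rather than produced during a live interaction.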

Admittedly, our AI intervention doesn’t grapple with the kind of pressing ethical dilemmas faced by tools in areas like maternal healthcare. However, even in our seemingly straightforward use case, there are challenges in building responsible AI technology that I believe are worth highlighting.

If you search the web for highly cited work on responsible AI practices, you’ll quickly get lost in a maze of frameworks and super-long checklists. The points in these lists tend to be generic, too, like: be good, do good, be kind, fair, accountable, interpretable, transparent, nice, and so on. Sure, this is hyperbole, and I’m being polemical here. I respect all the critical work done by the authors mentioned in the slides [1, 2], but this is probably how an ordinary good-faith tech worker would see things. Such lists either instil a false sense of confidence or push the tech worker away from pursuing these directions altogether.

In this respect, my engagement in the RAIL Fellowship was fruitful. The one-on-one mentoring sessions, the lectures, and the meetups really made the whole enterprise more approachable to me. I received guided and bespoke advice on responsible practices, which I’ll touch upon in the coming slides.

Alternative Perspectives

First, I had the chance to expand my understanding through alternative perspectives that pushed me to think beyond my immediate task and consider broader implications.

Sarah Newman, who is the director of metaLab at Harvard, shared edtech deployment experiences from her institute. This was invaluable for comparing with my own context in India. I’ll tie this to a concept called the Machine Learner Gap [3], that is, how AI implementations often fail when directly transplanted across regions without adaptation. Sarah’s insights reinforced this concept for me. For instance, I could critically see that the latest tools like the Tutor Copilot, designed for one-on-one digital tutoring, really wouldn’t suit our local realities.

Prof. Neha Kumar’s lecture on ASHA workers offered another powerful lens [4]. While government teachers are a different group, the parallels are striking. Edtech tools often undermine the role of teachers. But teachers in fact provide the foundation for our interventions: their questions and their feedback are what refine and improve our models. So it’s worth asking how they are impacted [5]. Prompted by the lecture, I reached out to our teachers and solicited their concerns and comments on our intervention, be it job security, replacement by AI, or how their efforts can refine our models.

Interface Design

Second, I became more thoughtful about interface design for AI interventions. I had a great interaction with Nishita Gill, Founder of Treemouse, who emphasized the importance of keeping the UI simple so as not to overwhelm users. You can see that the revision quiz UI is crisper and less cluttered than the main quiz UI.

We also discussed moving away from chat-like interfaces, which may seem engaging but often introduce too many interaction points and leave users confused. I feel we limit ourselves when we view LLMs solely through a chat-like environment. I think there is incredible untapped potential in interfaces with precomputed predictions.

Another concern is the hidden operational costs of AI. While it may seem initially that AI reduces workload, it can quietly take over as a core feature, requiring significant effort. Grievance redressal, for instance, can become very challenging for small teams if not planned well.

Open Source Implementation

I’ve had my reservations about open source in the past, especially the risk of copycats. But Tarunima Prabhakar’s lecture really shifted my perspective. Her phrasing of care as a necessary ethic stuck with me. Open source inherently pushes us to be more careful. Knowing that our work is out there fosters a sense of accountability.

It’s also a great way to attract talent and build capacity, especially in NGO contexts. We’ve had excellent contributions from interns to our tools. While it may feel like an overreach, there’s hope that open-source code can spread values and inspire meaningful action in those who engage with it.

Lastly, along with code, I believe there’s no shame in sharing negative results or failures openly; if anything, it encourages critical thinking and also acts as a kind of anti-marketing [6] to reduce AI hype.

Okay, so I want to briefly talk about Prof. Dinesh Mohan from IIT Delhi, who recently passed away. Prof. Mohan was a relentless advocate for road safety, compulsory helmets, reducing car speeds, and prioritizing public buses. One of our RAIL fellowship mentors, Prof. Subhasis Banerjee, has a moving writeup on him and his contrarian ideas which I’ve linked in the slides.

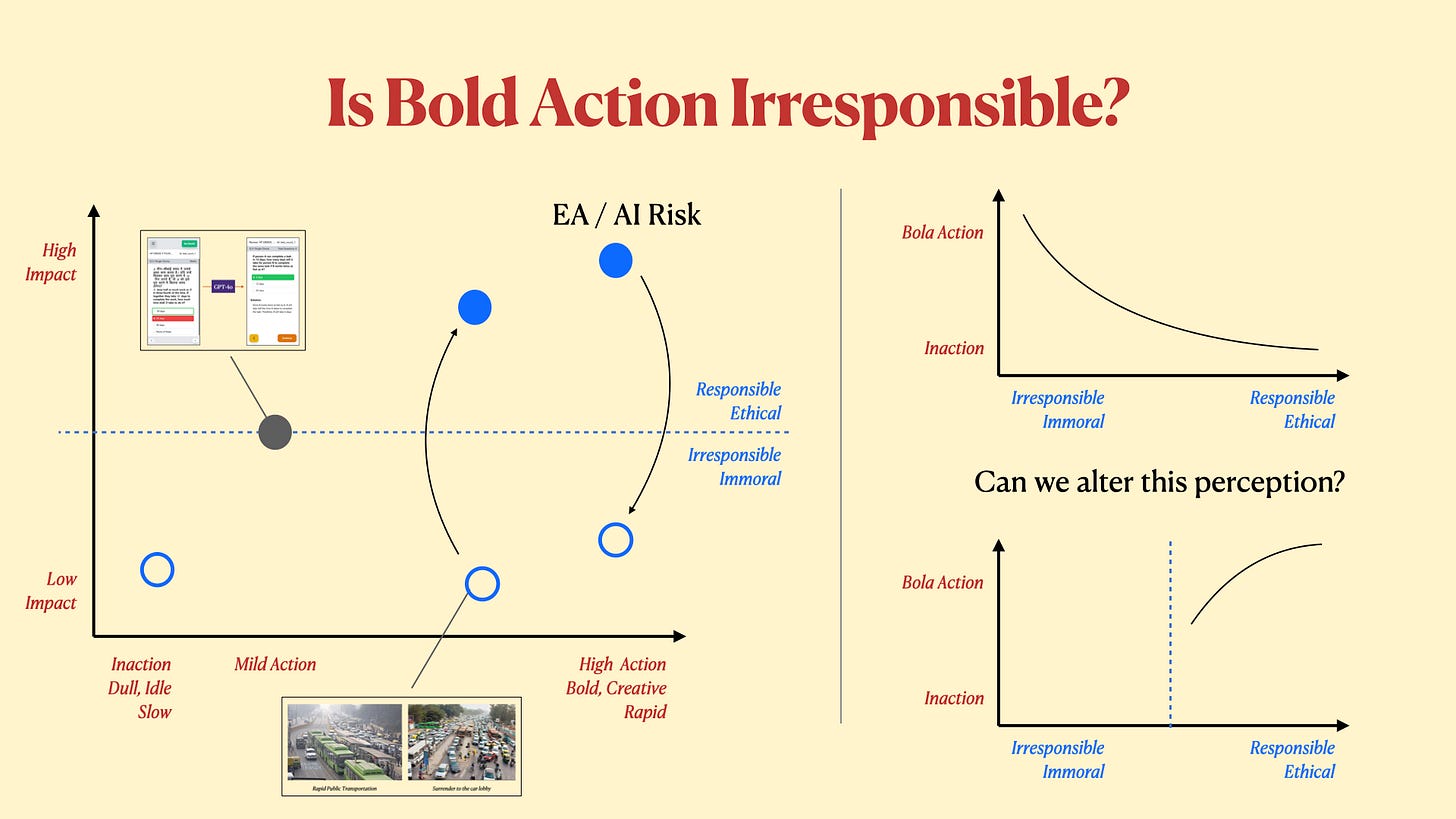

One of Prof. Mohan’s most ambitious projects, the Bus Rapid Transit (BRT) corridor in Delhi, faced severe backlash and was eventually scrapped in 2016 due to complaints from car users and challenges in crossing roads [7].

Critics accused him of stifling free will and undermining urban transport systems. But was he irresponsible? Or simply ahead of his time? This question bugs me because any disruptive idea, whether in technology or policy, often irritates us at first – it challenges us. But how do we judge whether it’s worth pursuing or not, especially through the lens of responsibility? Had Prof. Mohan’s BRT project succeeded, would critics praise his commitment to ethical values? Effectively, does impact blur our ideas of responsibility?

This slide is pretty unstructured at this point, but I’d like to draw your attention to the high-octane action around AI Risk. Several companies claim that they are being super responsible and saving the world by building large models. But what if it ends up as a hollow pursuit with no impact? Would we then call them irresponsible? Where does Avanti’s intervention, which I’d probably classify as mild action, stand here? Hollow? Or responsible?

There are too many moving pieces here. I believe we vastly overestimate our capacity to judge values like responsibility, morality, or ethical action. I’ll take another example, that of politicians. We often hear things like: “Oh, this MLA is a little bit corrupt, but he sent his gundas to get my land deal fixed, so he’s impactful, and I’m gonna vote for him.” How much immorality is allowed to offset impactful action?

The right side of the slide is also superficial, but it reflects a common belief among many tech workers: that as we become more responsible, the number of guardrails will increase, ultimately leading to paralysis, with little to no action or painfully slow progress. This mindset is evident in the frustration many feel toward regulations like GDPR and the constant barrage of cookie consent banners.

The result is often a false binary: either we build nothing, trapped by the weight of ethical scrutiny, or we charge forward recklessly, ignoring all guardrails entirely.

I believe this perspective needs a fundamental shift. Responsibility doesn’t have to stifle creativity — it can be a springboard for bold and innovative action. In fact, I strongly believe that the more responsibly we act, the more imaginative and daring our solutions can become.

To summarize, I’ll read out the broad questions:

(1) Does impact blur our sense of responsibility?

(2) Are we too critical, pausing action unnecessarily?

(3) Or too hysterical, rushing into AGI risk debates?

(4) More importantly, is this moral calculus too nauseating for tech workers?

Maybe I’m being unduly pessimistic, but when working on large, impactful projects, clashes with norms seem inevitable. Initiatives like RAIL can play a crucial role here, helping us navigate these tensions thoughtfully, without losing the appetite for action.

Throughout the RAIL Fellowship, I’ve been fortunate to engage in diverse and inspiring conversations within the cohort. Jigar from ArtPark emphasized the importance of rigorous testing and validation before deploying AI systems. Sai from Digital Green shared insights into the value of rapid experimentation and iterative learning, encouraging me to see action as a crucial step toward meaningful innovation. Meanwhile, Amrita from Armman and Sweety from SNEHA Foundation illustrated the delicate balance required in the context of maternal health tools, where the margin for error is slim, and the need for ethical foresight is paramount.

I believe these discussions have helped us learn from each other, in exploring what’s worth the risk, what’s genuinely good, and what limits we should respect [8]. Hopefully, the values we’ve arrived at can inspire bold and creative action for the entire community.

Thank You.

Acknowledgements

I’m grateful to Sasha John and the DFL team for providing feedback on earlier iterations of the talk.

If you spot any errors, have suggestions for improvements, or wish to contribute to our repositories, please reach out to us on Avanti’s Discord channel: https://discord.gg/aNur9VPS2r

[1] European Commission High-Level Expert Group on AI. “Ethics Guidelines for Trustworthy AI.” (2019).

[2] Saif M. Mohammad. “Ethics sheet for automatic emotion recognition and sentiment analysis.” Computational Linguistics 48.2 (2022): 239-278.

[3] Nithya Sambasivan, Erin Arnesen, Ben Hutchinson, Tulsee Doshi, and Vinodkumar Prabhakaran. “Re-imagining Algorithmic Fairness in India and Beyond.” FAccT '21 (2021).

[4] Azra Ismail and Neha Kumar. “AI in Global Health: The View from the Front Lines.” CHI (2021).

[5] Dan Meyer. “The Kids That Edtech Writes Off.” (2024).

[6] Andy Matuschak. “Ethics of AI-based invention: a personal inquiry.” (2024).

[7] Geetam Tiwari. “Metro Rail and the City: Derailing Public Transport.” Economic and Political Weekly, vol. 48, no. 48, 2013, pp. 65–76. JSTOR, http://www.jstor.org/stable/23528925. Accessed 26 Nov. 2024.

[8] The RAIL Fellowship can be seen as an example of a meta-social good project. For a longer discussion, please refer to Prof. Aaditeshwar Seth’s book “Technology and (Dis) Empowerment: A Call to Technologists”.